Generative AI tools based on Large Language Models (LLMs), such as ChatGPT, became the hottest topic in the technology world in 2023. LLMs have evolved significantly over the past few years. In this article, we trace the timeline of major LLMs and related developments, from the early days of the field up to the latest innovations in 2023.

Early Years: 2017 - 2020

A language model is a type of AI model designed to understand, process, and generate human language text. The Transformer architecture that underpins today’s Large Language Models (LLMs) was introduced by Google researchers in 2017, and the first prominent LLMs were launched in 2018.

The 2018 LLM revolution began with the introduction of models that incorporated an unprecedented number of parameters. Parameters are the numerical weights that an LLM learns during training, and their number is a rough indicator of a model’s capacity and sophistication.
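To make the idea of a parameter count concrete, here is a minimal sketch that counts the weights and biases in a small fully connected network. The layer sizes are hypothetical and chosen only for illustration. Real LLMs use Transformer layers with additional components, so this is not how any specific model's published count was derived.

```python
def count_parameters(layer_sizes):
    """Count the weights and biases of a fully connected network.

    layer_sizes lists the width of each layer, input first.
    The sizes used below are illustrative assumptions, not real LLM dimensions.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per input-output connection
        total += n_out         # one bias per output unit
    return total


# Hypothetical toy network: 512 inputs, 2048 hidden units, 512 outputs.
toy_sizes = [512, 2048, 512]
print(f"{count_parameters(toy_sizes):,} parameters")
```

Even this toy network has roughly two million parameters, which gives a sense of scale for the hundreds of millions or billions quoted for LLMs.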

It all started in June 2018 with GPT-1, a model with 117 million parameters that laid the foundation for subsequent developments. At the time, few people outside the AI and machine learning fields noticed the birth of a model family that would bring about the first mass consumer AI revolution less than five years later.

Just months later, in October 2018, another prominent LLM, Google’s BERT, was born. In its largest configuration, BERT had 340 million parameters. Its ability to capture context from both sides of a word within a text was a significant leap in natural language processing (NLP).

During the early period of LLMs, the competition for the best model largely involved OpenAI’s GPT and Google’s BERT. BERT had clear performance advantages over GPT-1, but not for long. In February 2019, GPT-2 made its debut with 1.5 billion parameters. It outperformed GPT-1 across a range of text-related tasks and was far stronger than BERT at generating text.

OpenAI didn’t stop there. In June 2020, the company introduced GPT-3, a model with a staggering 175 billion parameters. GPT-3 was a game-changer, demonstrating remarkable text generation capabilities and impressive natural language understanding. The leap between the second and third versions of GPT has probably been the model family’s biggest performance improvement so far.

Entering Consumer Space

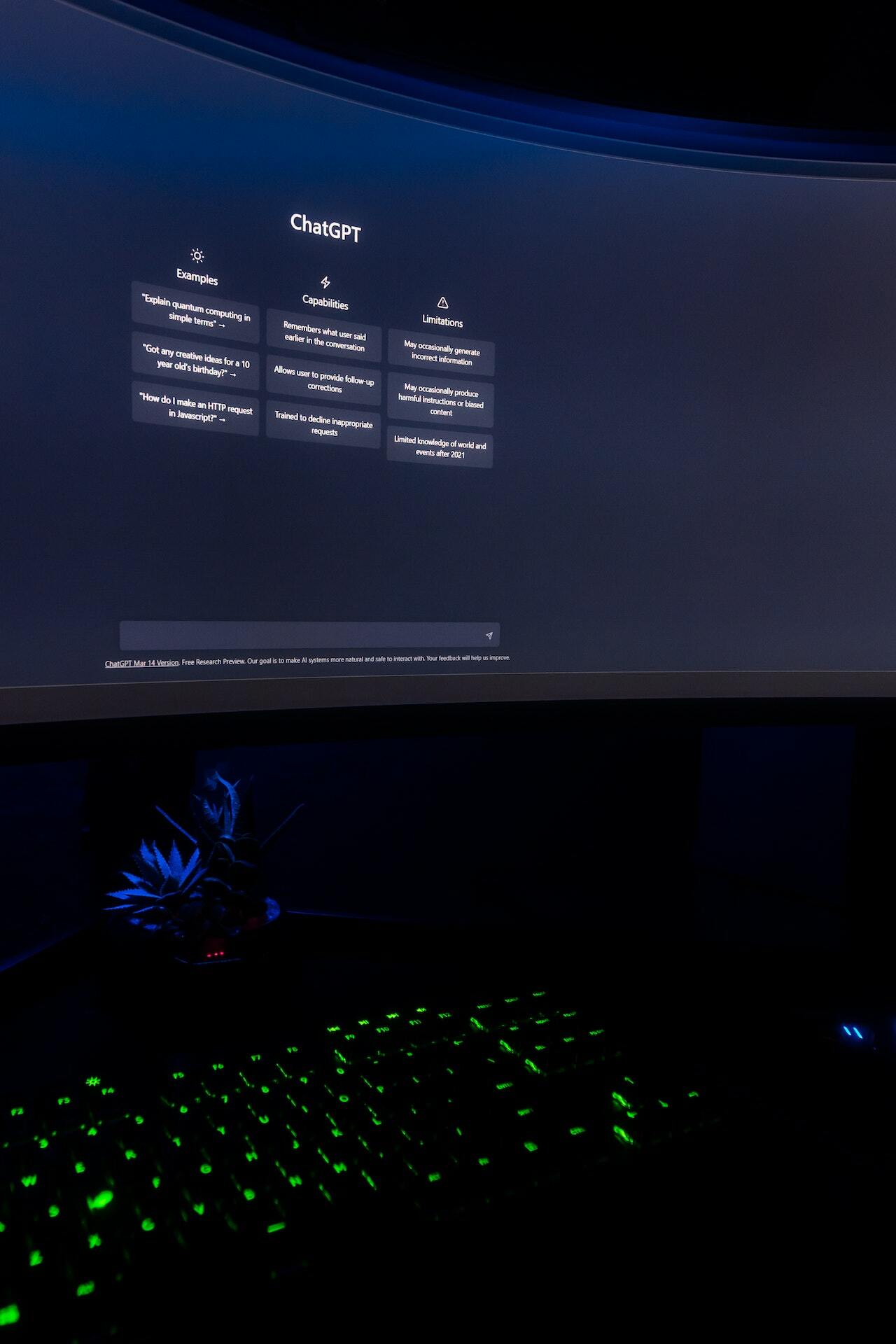

GPT-3 was the LLM that eventually ignited the AI revolution in the consumer space. Although the model was introduced in 2020, it wasn’t until late 2022 that generative AI gained widespread recognition and popularity. In November 2022, OpenAI launched ChatGPT, a user-friendly AI chat tool based on GPT-3.5, a refined successor to GPT-3. Sufficiently powerful LLMs had been in existence for a few years by then. However, it was ChatGPT’s ease of use, intuitive interface, and suitability for typical text tasks that helped move LLMs into the mass adoption stage.

2023 Milestones

As ChatGPT continued to take the world by storm, a group of vendors rushed to present and market their own LLM-based AI tools starting in early 2023.

In February 2023, Microsoft introduced its AI chatbot, Bing Chat, which was itself based on an OpenAI GPT model. It was March 2023, however, that proved particularly eventful for the LLM landscape. During the month:

· OpenAI continued to lead the charge with the release of GPT-4. OpenAI has not disclosed the model’s parameter count; unconfirmed third-party estimates put it at around 1.7 trillion. Whatever the exact figure, the release represented another milestone in the generative AI field.

· Google made its Bard AI chat tool publicly accessible. Bard initially ran on a lightweight version of Google’s LaMDA model and was upgraded to PaLM 2 when that model launched in May 2023. The initial version of PaLM was introduced in April 2022 with 540 billion parameters. Google remains evasive on the number of parameters for PaLM 2. Some researchers estimate that it’s likely somewhere between 100 and 200 billion.

· The AI startup Anthropic joined the race with its Claude LLM. The first version was introduced in March, followed by Claude 2 in July. Anthropic has not disclosed parameter counts for either model. Claude 2 excels at handling larger chunks of text at a time, with a context window of up to 100,000 tokens.

Role of Parameters in LLMs

In describing the LLMs above, we have often referred to their number of parameters. While the number of parameters is a significant factor in an LLM’s performance, it is not the sole variable. The amount and nature of the training data also play pivotal roles. For instance, smaller models that have been specialised for specific content types, such as financial data or programming code, can outperform larger, more generalised models.

Another key factor is how a model tokenises text and how many tokens it can process at once, known as its context window. For example, the larger context window of Claude 2 is the key reason why this LLM outperforms GPT when it comes to interpreting larger bodies of textual information.
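The effect of a token limit on long inputs can be sketched as follows. This is a simplified illustration, assuming that whitespace-separated words stand in for tokens. Real LLM tokenisers split text into subword units, so actual counts differ. The function names and the limits used here are hypothetical.

```python
def naive_tokenize(text):
    """Split on whitespace. An assumption for illustration only:
    real tokenisers produce subword tokens, not whole words."""
    return text.split()


def fits_in_context(text, context_limit):
    """Return True if the text fits within the given token limit."""
    return len(naive_tokenize(text)) <= context_limit


def split_into_chunks(text, context_limit):
    """Break text into pieces that each fit within the token limit."""
    if context_limit <= 0:
        raise ValueError("context_limit must be positive")
    tokens = naive_tokenize(text)
    return [
        " ".join(tokens[i:i + context_limit])
        for i in range(0, len(tokens), context_limit)
    ]


document = "word " * 250  # a stand-in for a long document (250 tokens)
print(fits_in_context(document, 100))         # too long for a small limit
print(fits_in_context(document, 1000))        # fits within a larger limit
print(len(split_into_chunks(document, 100)))  # pieces needed under the small limit
```

A model with a small limit has to process the document piece by piece and can lose connections between the pieces, whereas a model with a larger limit can read the whole document in one pass.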

While the initial phase of the generative AI revolution was marked by the introduction of models with an increasingly large number of parameters, the focus is now shifting toward improving performance and refining the capabilities of these models by other means.

2023 has so far proven to be the year of the biggest developments for the LLM field. However, since Claude 2’s entry into the game in July, the key developers (OpenAI, Google, Anthropic, and Microsoft) have turned their attention to performance fine-tuning, the safety of the models, and data privacy. Their recently formed alliance, the Frontier Model Forum, focuses on the safe use of LLM-based tools and the further advancement of the field. There have been few announcements of massive new models in recent months. We have now entered the next stage of the LLM revolution, and few people know for certain what is around the corner.
