Image Source: Antipodespress.com

Generative AI has become so sophisticated that human observation and oversight alone can no longer reliably spot AI content produced for malicious purposes. Robust AI systems are now needed to detect the avalanche of generated content, which might be used to deceive the public, move financial markets, influence elections, or advance dubious causes.

When AI Fails to Deceive

AI-generated (also known as “synthetic”) content on social media and elsewhere online is sometimes easy to spot. Often, simple fact-checking and verification against multiple trustworthy sources are enough. In May 2023, an AI-generated image of an explosion near the Pentagon circulated on Twitter and caused a brief panic. The image was quickly exposed as fake when multiple news agencies reported that the Pentagon was safe and sound. Despite the swift debunking, it still caused a short-lived dip in the US stock market.

In other cases, content gives itself away through the obvious blunders that generative AI tools are prone to. In June, the office of Toronto mayoral candidate Anthony Furey circulated a campaign image that depicted a woman with three arms.

Naturally, Mr. Furey wasn’t promising his electorate additional limbs as part of the election campaign. The AI-generated blunder was quickly spotted on social media and caused some embarrassment for the candidate.

Unfortunately, counting on AI tools to make obvious blunders and on quick fact-checking is far from enough for a sound strategy to detect malicious synthetic content. Researchers around the world are working on systems and models to fight deepfakes, fake news, fake social media content, and other forms of malicious AI content. Three major areas within this field are deepfake detection, content provenance, and bot detection.

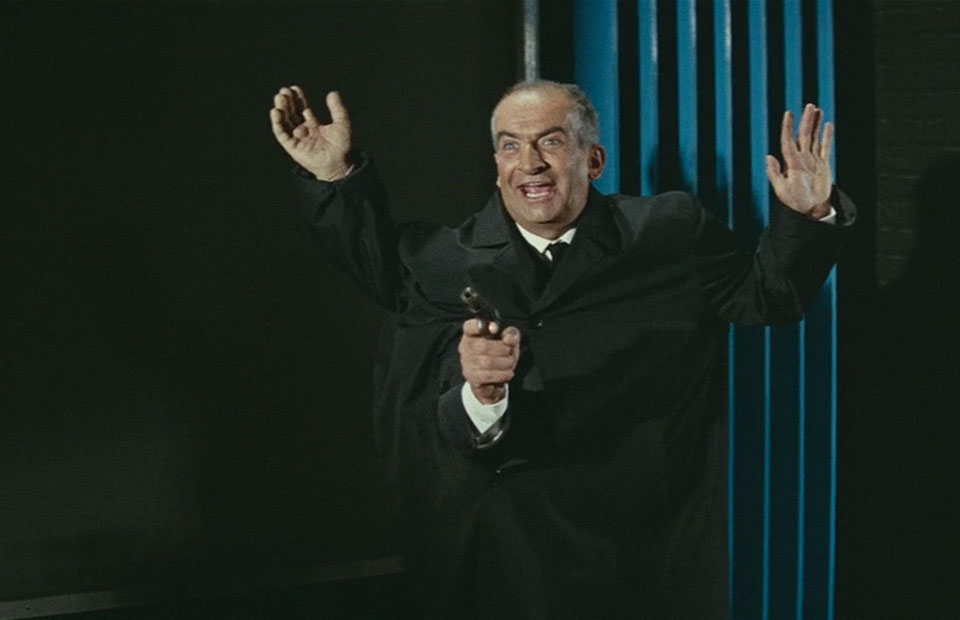

Deepfake Detection

Deepfakes are AI-generated videos, images, or audio clips that manipulate existing content by superimposing or replacing the original face or voice with someone else's, making it appear as if the subject is saying or doing things they never actually did.

Deepfake detection via AI often involves training models to recognise inconsistencies in shading, pixelation, or other visual content elements. As deepfake generators aren’t (yet) able to produce images or videos that replicate the original with 100% accuracy, deepfake detection AI systems aim to identify these imperfections.
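To make the idea of learning from visual inconsistencies more concrete, here is a deliberately simplified Python sketch. It assumes grayscale images given as nested lists of intensities, uses a hypothetical hand-picked artifact feature (average high-frequency energy from a Laplacian filter), and "trains" a single threshold halfway between the two class means. Production detectors instead train deep neural networks on large labelled datasets; all function names here are illustrative only.

```python
from statistics import mean

Image = list[list[float]]  # grayscale intensities in [0.0, 1.0]; at least 3x3


def high_freq_energy(img: Image) -> float:
    """Mean absolute 4-neighbour Laplacian response over interior pixels.

    Hypothetical artifact feature: unusually smooth or unusually noisy
    local texture can hint at generator artifacts.
    """
    h, w = len(img), len(img[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (4 * img[y][x] - img[y - 1][x] - img[y + 1][x]
                   - img[y][x - 1] - img[y][x + 1])
            responses.append(abs(lap))
    return mean(responses)


def train_threshold(real: list[Image], fake: list[Image]) -> tuple[float, bool]:
    """Fit a 1-D decision threshold halfway between the class means.

    Returns (threshold, fake_is_smoother).
    """
    real_mean = mean(high_freq_energy(i) for i in real)
    fake_mean = mean(high_freq_energy(i) for i in fake)
    return (real_mean + fake_mean) / 2, fake_mean < real_mean


def predict_fake(img: Image, threshold: float, fake_is_smoother: bool) -> bool:
    """Classify an image by which side of the learned threshold it falls on."""
    energy = high_freq_energy(img)
    return energy < threshold if fake_is_smoother else energy > threshold
```

A single learned threshold is trivially easy to evade; the sketch only shows the shape of the approach (extract artifact features, learn a decision rule from labelled real and synthetic examples, then classify new content).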

Among the large tech players, Intel and Meta are particularly active in deepfake detection R&D.

Content Provenance

Content provenance is a framework for quickly and automatically establishing the authenticity of any piece of online content, which makes it a major opportunity in the fight against maliciously generated synthetic content. It is still an emerging area, and established provenance systems are currently lacking, so for now it is more of a concept than a finished implementation. Adobe, through its Content Authenticity Initiative, is at the forefront of work in this direction.
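The core provenance idea, binding a cryptographic hash of the content to signed claims about its origin, can be sketched as follows. This is an assumption-laden illustration, not the Content Authenticity Initiative's actual format: the manifest fields and function names are hypothetical, and HMAC with a shared key stands in for the public-key signatures a real provenance system would use, purely so the example runs with Python's standard library.

```python
import hashlib
import hmac
import json


def make_manifest(content: bytes, creator: str, tool: str, key: bytes) -> dict:
    """Bind a content hash to (hypothetical) origin claims and sign the bundle."""
    claims = {
        "sha256": hashlib.sha256(content).hexdigest(),
        "creator": creator,
        "tool": tool,  # e.g. a camera model or a generative AI tool
    }
    payload = json.dumps(claims, sort_keys=True).encode()
    # HMAC stands in for a public-key signature in this sketch.
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": tag}


def verify(content: bytes, manifest: dict, key: bytes) -> bool:
    """Check that the claims are untampered and still match the content."""
    payload = json.dumps(manifest["claims"], sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # claims were altered after signing
    return manifest["claims"]["sha256"] == hashlib.sha256(content).hexdigest()
```

Verification fails both when the content changes after signing and when someone edits the claims (for example, the stated creator) without the signing key.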

Blockchain technology may have major use cases here. Because a blockchain is a trustless, cryptographically secured, transparent ledger, it could serve as the backbone of content provenance systems.
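The tamper-evidence property that makes a ledger attractive for provenance can be illustrated with a minimal hash chain, in which each record commits to the hash of the one before it. This sketch is hypothetical and runs on a single machine: it has none of the replication or consensus that makes a real blockchain trustless, and the record fields are assumptions.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class ProvenanceLedger:
    """Append-only log where each entry commits to the previous entry's hash."""
    entries: list[dict] = field(default_factory=list)

    @staticmethod
    def _hash(entry: dict) -> str:
        return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()

    def append(self, content_hash: str, claim: str) -> str:
        """Add a record linking a content hash to a claim; return the record's hash."""
        prev = self._hash(self.entries[-1]) if self.entries else "0" * 64
        entry = {"prev": prev, "content_hash": content_hash, "claim": claim}
        self.entries.append(entry)
        return self._hash(entry)

    def is_consistent(self) -> bool:
        """Recompute every link; editing an earlier entry breaks the next link."""
        for earlier, later in zip(self.entries, self.entries[1:]):
            if later["prev"] != self._hash(earlier):
                return False
        return True

    def find(self, content_hash: str) -> list[str]:
        """Return all recorded claims about a given piece of content."""
        return [e["claim"] for e in self.entries if e["content_hash"] == content_hash]
```

Note that the newest record is only protected once another record is appended after it; a real blockchain additionally replicates the chain across many independent parties so that no single actor can quietly rewrite history.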

For content provenance to work effectively, robust AI systems that can reliably track synthetic content and match it to claimed originals will also be required.
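Matching content to a claimed original has to tolerate small edits such as re-compression or brightness changes, so exact cryptographic hashes are not enough on their own. As a hedged illustration, the sketch below swaps in a classic non-learned technique, the average hash (a simple perceptual hash) compared by Hamming distance; the AI systems described above would more likely compare learned embeddings. Images are assumed to be grayscale nested lists whose sides are multiples of 8, and the 10-bit tolerance is an arbitrary example value.

```python
Image = list[list[float]]  # grayscale intensities; sides are multiples of `size`


def average_hash(img: Image, size: int = 8) -> int:
    """Downscale to size x size by block averaging, then emit one bit per
    cell: 1 if the cell is brighter than the mean of all cells, else 0."""
    bh, bw = len(img) // size, len(img[0]) // size
    cells = []
    for by in range(size):
        for bx in range(size):
            block = [img[y][x]
                     for y in range(by * bh, (by + 1) * bh)
                     for x in range(bx * bw, (bx + 1) * bw)]
            cells.append(sum(block) / len(block))
    avg = sum(cells) / len(cells)
    bits = 0
    for c in cells:
        bits = (bits << 1) | (1 if c > avg else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def likely_derived(candidate: Image, original: Image, max_bits: int = 10) -> bool:
    """Treat hashes within `max_bits` of each other as the same underlying image."""
    return hamming(average_hash(candidate), average_hash(original)) <= max_bits
```

Because the hash only records which regions are brighter than average, a uniformly brightened or contrast-adjusted copy keeps the same hash, while a different image lands far away.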

Bot Detection

Detecting bots, particularly on social media, is among the most effective ways to fight malicious synthetic content, because such content is rarely posted and shared by humans alone. People with malicious intent normally set up bots or bot networks to spread and amplify their synthetic content.

Thus, bot detection algorithms can catch the vast majority, though not all, of the AI-generated content that aims to disinform or sow chaos.
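Bot detection is commonly framed as classification over account and behaviour features. The sketch below is not ZENPULSAR's model: the features, weights, and bias are hypothetical hand-set values, chosen only to show how signals such as posting rate, account age, follower/following imbalance, and repeated content can be combined into a probability with a logistic function. A real detector would learn such weights from labelled accounts.

```python
import math
from dataclasses import dataclass


@dataclass
class Account:
    posts_per_day: float
    account_age_days: int
    followers: int
    following: int
    duplicate_post_ratio: float  # share of posts repeating near-identical text


# Hypothetical hand-set weights; a real detector would learn these from data.
WEIGHTS = {"rate": 0.04, "young": 1.5, "ratio": 1.2, "dupes": 3.0}
BIAS = -3.0


def bot_probability(a: Account) -> float:
    """Combine weighted features into a score and squash it into (0, 1)."""
    features = {
        "rate": a.posts_per_day,
        "young": 1.0 if a.account_age_days < 30 else 0.0,
        # Positive when an account follows far more users than follow it back.
        "ratio": math.log1p(a.following) - math.log1p(a.followers),
        "dupes": a.duplicate_post_ratio,
    }
    z = BIAS + sum(WEIGHTS[k] * v for k, v in features.items())
    return 1 / (1 + math.exp(-z))  # logistic function


def flag_bots(accounts: list[Account], threshold: float = 0.5) -> list[Account]:
    """Return the accounts whose estimated bot probability meets the threshold."""
    return [a for a in accounts if bot_probability(a) >= threshold]
```

Raising the threshold flags fewer genuine users at the cost of missing more bots, which is the trade-off any deployed detector has to tune.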

At ZENPULSAR, we are at the forefront of R&D in this area. Our AI models trained to detect bots on social media already achieve detection rates of 95% to 98%, depending on the platform. By maintaining these rates, we protect our social sentiment tools, datasets, and users from the vast majority of the malicious synthetic content circulating on social media.
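The article does not define "detection rate". Assuming it means the share of genuine bots a model flags (recall), it can be computed from labelled evaluation data as in the sketch below. The false-positive rate is included too, because a detection rate alone says nothing about how many human accounts are wrongly flagged. The function name is hypothetical.

```python
def detection_metrics(labels: list[bool], flagged: list[bool]) -> dict[str, float]:
    """labels[i]: account i really is a bot; flagged[i]: the detector flagged it.

    Assumes the evaluation set contains both bots and humans.
    """
    pairs = list(zip(labels, flagged))
    tp = sum(l and f for l, f in pairs)              # bots caught
    fn = sum(l and not f for l, f in pairs)          # bots missed
    fp = sum((not l) and f for l, f in pairs)        # humans wrongly flagged
    tn = sum((not l) and not f for l, f in pairs)    # humans correctly passed
    return {
        "detection_rate": tp / (tp + fn),
        "false_positive_rate": fp / (fp + tn),
    }
```

For instance, flagging 96 of 100 bots and 9 of 900 humans gives a detection rate of 0.96 and a false-positive rate of 0.01.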

Deepfake detection, content provenance, and bot detection are among the major R&D areas in the fight against the already disturbing onslaught of malicious synthetic content online. As generative AI continues to evolve, expect the problem to deepen. For AI researchers and developers, this is both a major challenge and an opportunity to protect humanity from the devastating effects of malicious AI-generated content: manipulated elections, chaotic financial markets, lost trust in public institutions, and even being unable to tell whether the family member on the other end of a phone or video call is real or an AI impersonator.
